This article is reproduced from Medium.com.

For researchers in online disinformation and information operations, it’s been an interesting week. On Wednesday, Twitter released an archive of tweets shared by accounts from the Internet Research Agency (IRA), an organization in St. Petersburg, Russia, with alleged ties to the Russian government’s intelligence apparatus. This data archive provides a new window into Russia’s recent “information operations.” On Friday, the U.S. Department of Justice filed charges against a Russian citizen for her role in ongoing information operations and provided new details about those operations’ strategies and goals.

Information operations exploit information systems (like social media platforms) to manipulate audiences for strategic, political goals—in this case, one of the goals was to influence the U.S. election in 2016.

In our lab at the University of Washington (UW), we’ve been accidentally studying these information operations since early 2016. These recent developments offer new context for our research and, in many ways, confirm what we thought we were seeing—at the intersection of information operations and political discourse in the United States—from a very different view.

A few years ago, UW PhD student Leo Stewart initiated a project to study online conversations around the #BlackLivesMatter movement. This research grew to become a collaborative project that included PhD student Ahmer Arif, iSchool assistant professor Emma Spiro, and me. As the research evolved, we began to focus on “framing contests” within what turned out to be a very politicized online conversation.


The concept of framing has interesting roots and competing definitions (see Goffman, Entman, Benford and Snow). In simple terms, a frame is a way of seeing and understanding the world that helps us interpret new information. Each of us has a set of frames we use to make sense of what we see, hear, and experience. Frames exist within individuals, but they can also be shared. Framing is the process of shaping other people’s frames, guiding how other people interpret new information. We can talk about the activity of framing as it takes place in classrooms, through news broadcasts, political ads, or a conversation with a friend helping you understand why it’s so important to vote. Framing can be a powerful political tool.

Framing contests occur when two (or more) groups attempt to promote different frames, for example, in relation to a specific historical event or emerging social problem. Think about the recent images of the group of Central American migrants trying to cross the border into Mexico. One framing for these images sees these people as refugees trying to escape poverty and violence and describes their coordinated movement (in the “caravan”) as a method for ensuring their safety as they travel hundreds of miles in hopes of a better life. A competing framing sees this caravan as a chaotic group of foreign invaders, “including many criminals,” marching toward the United States (due to weak immigration laws created by Democrats), where they will cause economic damage and perpetrate violence. These are two distinct frames, and we can see how people with political motives are working to refine, highlight, and spread their own frame while undermining or drowning out the other.

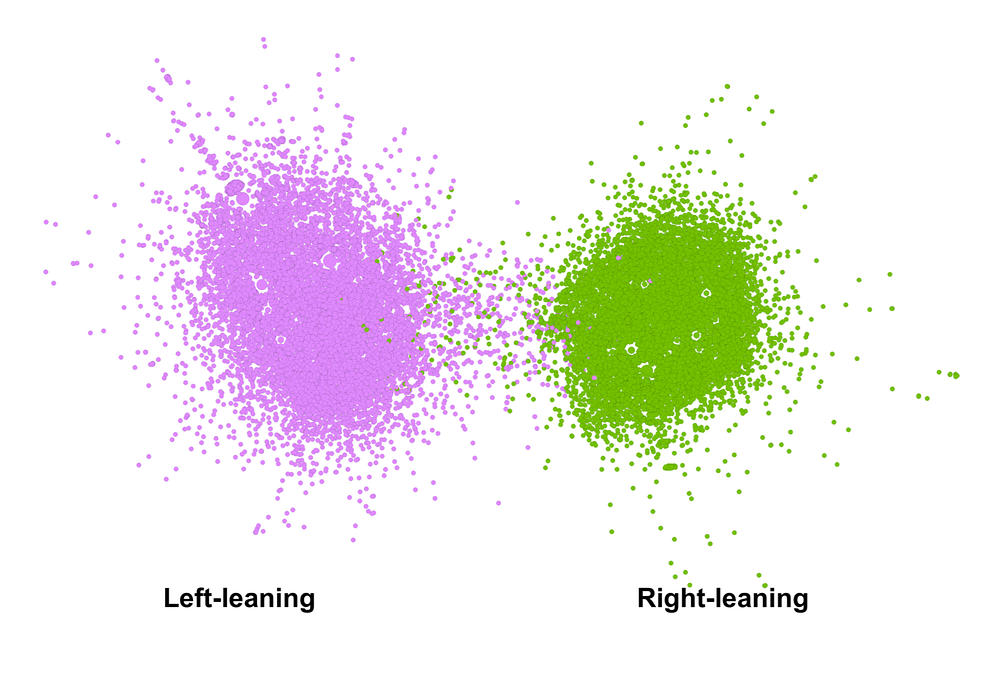

In 2017, we published a paper examining framing contests on Twitter related to a subset of #BlackLivesMatter conversations that took place around shooting events in 2016. In that work, we first took a meta-level view of more than 66,000 tweets and 8,500 accounts that were highly active in that conversation, creating a network graph (below) based on a “shared audience” metric that allowed us to group accounts together based on having similar sets of followers.
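The article does not spell out how the “shared audience” metric is computed, so the following is only a minimal sketch under stated assumptions: it treats the similarity of two accounts as the Jaccard similarity of their follower sets (shared followers divided by all distinct followers) and links accounts whose score meets a hypothetical `threshold`. The function names, threshold, and toy follower IDs are all illustrative, not the authors’ actual method or data.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Fraction of the combined follower set that two accounts share (assumed metric)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def shared_audience_edges(
    followers: dict[str, set[str]], threshold: float = 0.2
) -> list[tuple[str, str, float]]:
    """Connect every pair of accounts whose follower overlap meets a hypothetical threshold."""
    edges = []
    for u, v in combinations(sorted(followers), 2):
        score = jaccard(followers[u], followers[v])
        if score >= threshold:
            edges.append((u, v, round(score, 3)))
    return edges


# Toy data: made-up account and follower IDs, not from the study
followers = {
    "acct_a": {"f1", "f2", "f3", "f4"},
    "acct_b": {"f2", "f3", "f4", "f5"},
    "acct_c": {"f7", "f8", "f9"},
    "acct_d": {"f8", "f9", "f10"},
}
print(shared_audience_edges(followers))
# [('acct_a', 'acct_b', 0.6), ('acct_c', 'acct_d', 0.5)]
```

In this toy example, the resulting edges split the four accounts into two groups with no links between them, which is the kind of structure a graph layout would then draw as two separate clusters.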

That graph revealed that, structurally, the #BlackLivesMatter Twitter conversation had two distinct clusters or communities of accounts—one on the political “left” that was supportive of #BlackLivesMatter and one on the political “right” that was critical of #BlackLivesMatter.

Next, we conducted a qualitative analysis of the content being shared by accounts on the two sides of the conversation. For example, consider these tweets from the left side of the graph:

Tweet: Cops called elderly Black man the n-word before shooting him to death #KillerCops #BlackLivesMatter

Tweet: WHERE’S ALL THE #BlueLivesMatter PEOPLE?? 2 POLICE OFFICERS SHOT BY 2 WHITE MEN, BOTH SHOOTERS IN CUSTODY NOT DEAD.

And these tweets (from the right side of the graph):

Tweet: Nothing Says #BlackLivesMatter like mass looting convenience stores & shooting ppl over the death of an armed thug.

Tweet: What is this world coming to when you can’t aim a gun at some cops without them shooting you? #BlackLivesMatter.

In these tweets, you can see the kinds of “framing contests” that were taking place. On the left, content coalesced around frames that highlighted cases where African-Americans were victims of police violence, characterizing this as a form of systemic racism and ongoing injustice. On the right, content supported frames that highlighted violence within the African-American community, implicitly arguing that police were acting reasonably in using violence. You can also see how the content on the right attempts to explicitly counter and undermine the #BlackLivesMatter movement and its frames—and, in turn, how content from the left reacts to and attempts to contest the counter-frames from the right.

Our research surfaced several interesting findings about the structure of the two distinct clusters and the nature of “grassroots” activism shaping both sides of the conversation. But at a high level, two of our main takeaways were how divided those two communities were and how toxic much of the content was.

Our initial paper was accepted for publication in autumn 2017, and we finished the final version in early October. Then things got interesting.

A few weeks later, in November 2017, the House Intelligence Committee released a list of accounts, given to them by Twitter, that were found to be associated with Russia’s Internet Research Agency (IRA) and their influence campaign targeting the 2016 U.S. election. The activities of these accounts—the information operations that they were part of—had been occurring at the same time as the politicized conversations we had been studying so closely.

Looking over the list, we recognized several account names. We decided to cross-check the list of accounts with the accounts in our #BlackLivesMatter dataset. Indeed, dozens of the accounts in the list appeared in our data. Some—like @Crystal1Johnson and @TEN_GOP—were among the most retweeted accounts in our analysis. And some of the tweet examples we featured in our earlier paper, including some of the most problematic tweets, were not posted by “real” #BlackLivesMatter or #BlueLivesMatter activists, but by IRA accounts.
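A cross-check like this amounts to a set intersection. The sketch below is a hypothetical illustration rather than the lab’s actual code: it assumes the dataset can be reduced to `(retweeter, original_author)` pairs, normalizes handles by stripping a leading `@` and lowercasing (Twitter handles are not case-sensitive), and ranks matched accounts by how often they were retweeted. All account names are made up.

```python
from collections import Counter


def normalize(handle: str) -> str:
    """Strip a leading '@' and lowercase, since Twitter handles are case-insensitive."""
    return handle.lstrip("@").lower()


def ira_overlap(ira_list, retweets):
    """Return listed accounts that appear anywhere in the dataset, ranked by times retweeted.

    `retweets` is an iterable of (retweeter, original_author) pairs (assumed format).
    """
    ira = {normalize(h) for h in ira_list}
    pairs = [(normalize(r), normalize(a)) for r, a in retweets]
    in_dataset = {acct for pair in pairs for acct in pair}
    times_retweeted = Counter(author for _, author in pairs)
    matched = ira & in_dataset
    # Most-retweeted first; ties broken alphabetically for stable output
    return sorted(((h, times_retweeted[h]) for h in matched), key=lambda p: (-p[1], p[0]))


# Hypothetical handles, not from the real list or dataset
ira_list = ["@IRA_Acct_1", "@IRA_Acct_2", "@IRA_Acct_3"]
retweets = [
    ("user_x", "ira_acct_1"),
    ("user_y", "IRA_ACCT_1"),
    ("user_z", "activist_1"),
    ("ira_acct_2", "activist_1"),
]
print(ira_overlap(ira_list, retweets))
# [('ira_acct_1', 2), ('ira_acct_2', 0)]
```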

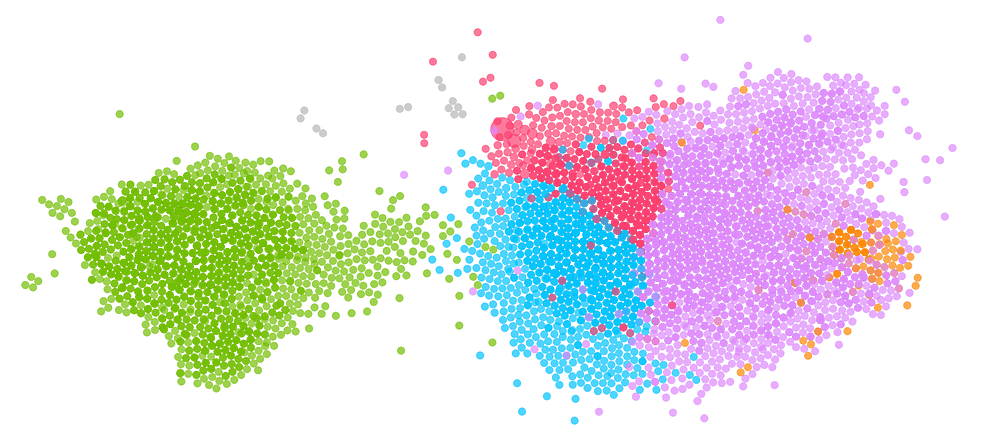

To get a better view of how IRA accounts participated in the #BlackLivesMatter Twitter conversation, we created another network graph (below) using retweet patterns from the accounts. Similar to the graph above, we saw two different clusters of accounts that tended to retweet other accounts in their cluster, but not accounts in the other cluster. Again, there was a cluster of accounts (on the left, in magenta) that was pro-BlackLivesMatter and liberal/Democrat and a cluster (on the right, in green) that was anti-BlackLivesMatter and conservative/Republican.
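One way to put a number on “tended to retweet other accounts in their cluster” is to measure, for each cluster, the share of its members’ retweets that point to accounts in the same cluster. This minimal sketch assumes cluster labels already exist (for example, from a graph layout or a community-detection step, which the article does not detail) and skips retweets involving unlabeled accounts; the labels and pairs are invented for illustration.

```python
from collections import Counter


def within_cluster_share(retweets, cluster_of):
    """Fraction of each cluster's outgoing retweets that land inside the same cluster.

    `retweets`: (retweeter, original_author) pairs.
    `cluster_of`: account -> cluster label, assumed to come from an earlier step.
    """
    total = Counter()
    inside = Counter()
    for retweeter, author in retweets:
        if retweeter not in cluster_of or author not in cluster_of:
            continue  # ignore pairs with an unlabeled account
        c = cluster_of[retweeter]
        total[c] += 1
        if cluster_of[author] == c:
            inside[c] += 1
    return {c: inside[c] / total[c] for c in total}


# Toy labels and retweets, not from the study
cluster_of = {"a1": "left", "a2": "left", "a3": "left", "b1": "right", "b2": "right"}
retweets = [
    ("a1", "a2"), ("a2", "a3"), ("a3", "a1"), ("a1", "b1"),
    ("b1", "b2"), ("b2", "b1"),
]
print(within_cluster_share(retweets, cluster_of))
# {'left': 0.75, 'right': 1.0}
```

Values close to 1.0 for both clusters would indicate the kind of separation the retweet graph shows visually.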

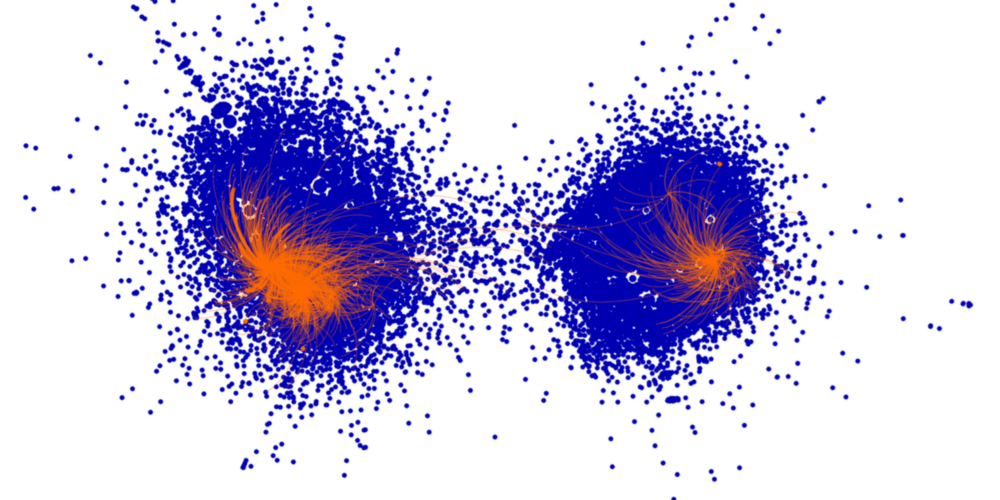

Next, we highlighted the accounts on the list of IRA accounts. That graph, in all its creepy glory, is below, with the IRA accounts in orange and other accounts in blue.

As you can see, the IRA accounts impersonated activists on both sides of the conversation. On the left were IRA accounts like @Crystal1Johnson, @gloed_up, and @BleepThePolice that enacted the personas of African-American activists supporting #BlackLivesMatter. On the right were IRA accounts like @TEN_GOP, @USA_Gunslinger, and @SouthLoneStar that pretended to be conservative U.S. citizens or political groups critical of the #BlackLivesMatter movement.

Ahmer Arif conducted a deep qualitative analysis of the IRA accounts active in this conversation, studying their profiles and tweets to understand how they carefully crafted and maintained their personas. Among other observations, Arif described how, as a left-leaning person who supports #BlackLivesMatter, he found it easy to problematize much of the content from the accounts on the “right” side of the graph: some of that content, which included racist and explicitly anti-immigrant statements and images, was profoundly disturbing. But in some ways, he was more troubled by his reaction to the IRA content from the left side of the graph, content that often aligned with his own frames. At times, this content left him doubting whether it was really propaganda after all.

This underscores the power and nuance of these strategies. These IRA agents were enacting caricatures of politically active U.S. citizens. In some cases, these were gross caricatures of the worst kinds of online actors, using the most toxic rhetoric. But, in other cases, these accounts appeared to be everyday people like us, people who care about the things we care about, people who want the things we want, people who share our values and frames. Together, these cases point to two different aspects of these information operations.

First, these information operations are targeting us within our online communities, the places we go to have our voices heard, to make social connections, to organize political action. They are infiltrating these communities by acting like other members of the community, developing trust, gathering audiences. Second, these operations begin to take advantage of that trust for different goals, to shape those communities toward the strategic goals of the operators (in this case, the Russian government).

One of these goals is to “sow division,” to put pressure on the fault lines in our society. A divided society that turns against itself, that cannot come together and find common ground, is one that is easily manipulated. Look at how the orange accounts in the graph (Figure 3) are at the outside of the clusters; perhaps you can imagine them literally pulling the two communities further apart. Russian agents did not create political division in the United States, but they were working to encourage it.


Their second goal is to shape these communities toward their other strategic aims. Not surprisingly, considering what we now know about their 2016 strategy, the IRA accounts on the right in this graph converged in support of Donald Trump. Their activity on the left is more interesting. As we discussed in our previous paper (written before we knew about the IRA activities), the accounts in the pro-#BlackLivesMatter cluster were harshly divided in sentiment about Hillary Clinton and the 2016 election. When we look specifically at the IRA accounts on the left, they were consistently critical of Hillary Clinton, highlighting previous statements of hers they perceived to be racist and encouraging otherwise left-leaning people not to vote for her. Therefore, we can see the IRA accounts using two different strategies on the different sides of the graph, but with the same goal (of electing Donald Trump).

The #BlackLivesMatter conversation isn’t the only political conversation the IRA targeted. With the new data provided by Twitter, we can see there were several conversational communities they participated in, from gun rights to immigration issues to vaccine debates. Stepping back and keeping these views of the data in mind, we need to be careful, both in the case of #BlackLivesMatter and these other public issues, to resist the temptation to say that because these movements or communities were targeted by Russian information operations, they are therefore illegitimate. That IRA accounts sent messages supporting #BlackLivesMatter does not mean that ending racial injustice in the United States aligns with Russia’s strategic goals or that #BlackLivesMatter is an arm of the Russian government. (IRA agents also sent messages saying the exact opposite, so we can assume they are, at most, ambivalent.)

If you accept this, then you should also be able to think similarly about the IRA activities supporting gun rights and ending illegal immigration in the United States. Russia likely does not care about most domestic issues in the United States. Their participation in these conversations has a different set of goals: to undermine the U.S. by dividing us, to erode our trust in democracy (and other institutions), and to support specific political outcomes that weaken our strategic positions and strengthen theirs. Those are the goals of their information operations.

One of the most amazing things about the internet age is how it allows us to come together—with people next door, across the country, and around the world—and work together toward shared causes. We’ve seen the positive aspects of this with digital volunteerism during disasters and online political activism during events like the Arab Spring. But some of the same mechanisms that make online organizing so powerful also make us particularly vulnerable, in these spaces, to tactics like those the IRA are using.

Passing along recommendations from Arif: if we could leave readers with one goal, it would be to become more reflective about how we engage with information online (and elsewhere), to tune in to how this information affects us emotionally, and to consider that the people who seek to manipulate us (for example, by shaping our frames) are not merely yelling at us from the “other side” of these political divides but are increasingly trying to cultivate and shape us from within our own communities.